If the last decade of social media was shaped by user-generated content and the promise of connecting the world, the next one is being shaped by algorithms that quietly decide what we see, when we see it and why. But how they make those decisions? Still a black box. Transparency hasn’t caught up.

AI is becoming even more embedded in our everyday tech, from personalized search to smart home devices to the next generation of AI-native browsers and operating systems. These tools don’t just help us find information, they shape how we access and interact with it. And behind it all are invisible decision-makers influencing what we see, without much accountability.

We’re not just talking about YouTube recommendations or who pops up first in your Instagram feed anymore. We’re talking about AI deciding what information you need before you even ask for it and filtering the entire internet through a black box you’ll never have complete access to.

That’s a problem.

The Algorithm Isn’t Coming. It’s Already Here.

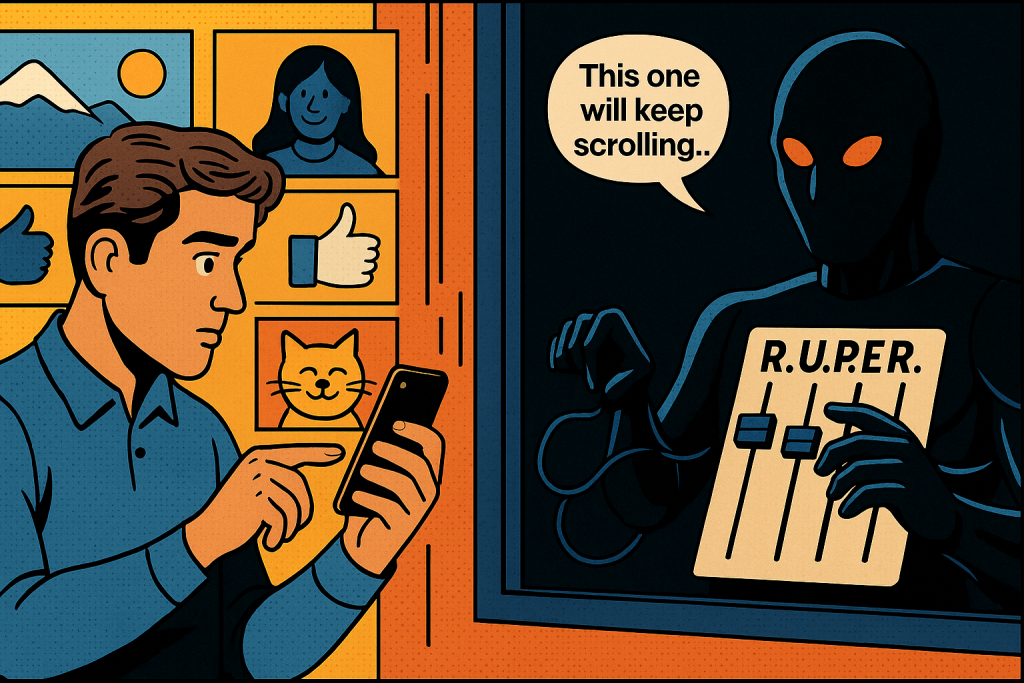

When we say “algorithm,” what we really mean is a set of rules, usually written by engineers and refined by machine learning models, that sorts and prioritizes content based on certain goals like recency, user satisfaction, profit, engagement and relevance. These models learn from what we click on, how long we hover, what we skip, etc. And they adapt constantly.
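To make that less abstract, here is a minimal sketch of what such a rule set could look like as a weighted score. The signal names mirror the goals listed above, but the weights, the `Item` class and the sample values are all invented for illustration. No platform publishes its real formula, so treat this as a toy model and not a description of any actual system.

```python
from dataclasses import dataclass

# Hypothetical weights. Real platforms tune these continuously and keep them private.
WEIGHTS = {
    "recency": 0.15,
    "satisfaction": 0.20,
    "profit": 0.15,
    "engagement": 0.30,
    "relevance": 0.20,
}


@dataclass
class Item:
    title: str
    signals: dict  # each signal is assumed to be normalized to the range 0..1


def score(item, weights=WEIGHTS):
    """Weighted sum of an item's signals; a missing signal counts as 0."""
    return sum(w * item.signals.get(name, 0.0) for name, w in weights.items())


def rank(items, weights=WEIGHTS):
    """Return items sorted from highest to lowest score."""
    return sorted(items, key=lambda it: score(it, weights), reverse=True)


# Made-up sample items.
feed = [
    Item("Local news update", {"recency": 0.9, "satisfaction": 0.5, "profit": 0.1,
                               "engagement": 0.3, "relevance": 0.6}),
    Item("Viral dog video", {"recency": 0.6, "satisfaction": 0.7, "profit": 0.4,
                             "engagement": 0.9, "relevance": 0.5}),
    Item("Sponsored gadget review", {"recency": 0.4, "satisfaction": 0.4, "profit": 0.9,
                                     "engagement": 0.5, "relevance": 0.3}),
]

for item in rank(feed):
    print(f"{score(item):.2f}  {item.title}")
```

With these made-up numbers the viral video outranks the news update, simply because engagement carries the heaviest weight. Change the weights and the feed changes with them, which is exactly the part users never get to see.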

Take TikTok’s For You page. It doesn’t just react to your interests, it actively shapes them. Studies have shown TikTok can identify your preferences in as little as 30 minutes of usage.

Honestly not too surprising. Pre-AI recommendation engines could infer interest from simple behavioral signals like dwell time or clicks. What has changed is:

- Scale: AI can now parse signals from billions of interactions across modalities (video, audio, text, etc.) in real time.

- Speed: It can lock onto emerging patterns in minutes, like that 30-minute TikTok session.

- Subtlety: AI doesn’t just notice you like dogs, it can infer you prefer golden retrievers in nature videos with acoustic background music and no narration.

So it’s not based on what you say you like, but what your behavior betrays. That dopamine rush you get from one viral video? The algorithm notes it and gives you more. Before long, your world has been curated in a way that may feel organic, but under the hood, it’s still driven by Recency, User satisfaction, Profit, Engagement and Relevance, better known (to absolutely no one) as RUPER.

And now AI is accelerating that shift.

AI Will Supercharge the Filter Bubble

As large language models and generative AI get pulled deeper into consumer devices, we’re entering a world where algorithmic decision-making isn’t just about showing you content. It’s about creating it, summarizing it, contextualizing it, even editorializing it. AI won’t just decide which articles to show you, it’ll package them up into something pretty made just for you.

Helpful Tip

Even AI assistants can subtly reinforce your biases or emotional state. Always ask, “Why is it responding this way?”, especially when it feels too agreeable. OpenAI recently addressed this in their post on sycophancy in AI models.

Google’s Search Generative Experience (SGE) is already blending traditional search with AI overviews, putting algorithmically generated answers front and center, sometimes pushing organic results far down the page. That means the source of your information isn’t just being filtered, it’s being synthesized, remixed and in some cases anonymized.

The result? You’re no longer consuming content from people or organizations. You’re consuming interpretations created by machines, shaped by proprietary rules you’ll never get to see.

Algorithms Aren’t Inherently Bad

To be clear, RUPER algorithms are not the villain. They’re essential. Without them, modern digital platforms wouldn’t scale. You’d drown in a sea of irrelevant content. Smart curation is necessary to make sense of the overwhelming flood of data online and honestly … many of these algorithms do make our lives better.

Spotify’s AI DJ is actually pretty good and an easy go-to for when I just want music with nothing specific in mind. Netflix’s “Because You Watched” suggestions aren’t always spot-on, but they do surface stuff I’d probably never find on my own. Google Maps rerouting around traffic? Really cool, although I wish they’d let you turn it off sometimes.

The issue is opacity.

The average user doesn’t know why their feed looks the way it does. And as algorithms become more sophisticated and personalized, especially with AI in the mix, they become harder to interrogate. Why did that news story show up first? Why did that person’s post disappear? Why do I never see dissenting opinions anymore?

The main culprits are the algorithms behind feeds like Facebook’s News Feed, YouTube’s recommendations, TikTok’s For You page and even Google’s featured snippets. Each one optimizes for engagement, recency or relevance, but rarely shows its math. The result is an experience that feels tailored while quietly narrowing your perspective.

We’re being influenced in subtle, pervasive ways. And if we don’t demand transparency now, it might be too late later.

Why the Culture Needs to Catch Up

There’s a growing narrative that AI is making us dumber or that algorithmic feeds are eroding our ability to think critically. But that gives the tech too much credit and lets everything else off the hook.

The truth is, these tools didn’t create the problem, they just revealed how unprepared we are for them. Our education systems, media habits and even workplace structures were designed for a different era where information was slower, narrower and far less interactive. We’ve needed a reset for a long time.

Now we have tools that can accelerate learning, automate routine tasks and unlock new creative possibilities. But instead of adapting, we’re still approaching them like distractions … outsourcing too much and asking too little.

Generative AI isn’t inherently the enemy of thought. If used intentionally, it could help build a more curious, self-directed culture. But only if we stay aware of how these systems shape what we see and how we think.

That’s why algorithmic transparency matters. It’s how we become active participants instead of passive consumers.

The Hidden Cost of Personalization

What feels convenient at first can quietly narrow your perspective.

Facebook learned this the hard way during the 2016 election. Its algorithm prioritized engagement, and the most engaging content was often the most polarizing. That same pattern plays out across Twitter, YouTube, Reddit and TikTok. The more you engage with one side of an issue, the more you’re fed similar content, reinforcing your perspective while hiding opposing views.

Now imagine that same dynamic applied not just to your social feed, but to your AI assistant, your search engine, your AR glasses. A world where every experience is curated to your past behavior and emotional triggers.

Helpful Tip

If you’re only seeing what you agree with online, it’s probably not by accident. Curate your own digital diet, don’t just consume what’s served. Try searching for opposing viewpoints intentionally. I built a few AI tools to help me with this.

Why Transparency Needs to Be Non-Negotiable

There are two types of transparency we need from these platforms:

- Algorithmic transparency: Platforms should provide clearer explanations of how algorithms work, what signals they prioritize and how they adapt over time. This doesn’t mean giving away trade secrets, but it does mean giving users a meaningful understanding of the system.

- Outcome transparency: Users should be able to see why specific content was recommended. What factors contributed? Can they adjust the weight of those factors? Can they opt out?
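As a rough sketch of what outcome transparency could mean in practice, the snippet below breaks a recommendation down into per-factor contributions and lets the user override the weights, including setting one to zero to opt out. The factor names, default weights and the `explain` and `adjust` helpers are hypothetical. Real ranking systems are far more complex, so this only illustrates the shape of the idea.

```python
def explain(signals, weights):
    """Return (factor, contribution) pairs, largest contribution first."""
    contributions = {name: weights.get(name, 0.0) * value
                     for name, value in signals.items()}
    return sorted(contributions.items(), key=lambda pair: pair[1], reverse=True)


def adjust(weights, **overrides):
    """Return a copy of the weights with user overrides applied; 0 opts a factor out."""
    unknown = set(overrides) - set(weights)
    if unknown:
        raise KeyError(f"unknown factors: {sorted(unknown)}")
    return {**weights, **overrides}


# Hypothetical platform defaults and one recommended post's signals (0..1).
default_weights = {"engagement": 0.5, "recency": 0.2, "relevance": 0.3}
post_signals = {"engagement": 0.9, "recency": 0.4, "relevance": 0.5}

print("Why am I seeing this?")
for factor, contribution in explain(post_signals, default_weights):
    print(f"  {factor}: {contribution:.2f}")

# The user opts out of engagement-based ranking and boosts relevance instead.
my_weights = adjust(default_weights, engagement=0.0, relevance=0.8)
print("Top factor after adjusting:", explain(post_signals, my_weights)[0][0])
```

Under the defaults, engagement is the biggest reason the post appears. Once the user zeroes it out, relevance takes over. Nothing about the underlying model has to be disclosed for this kind of explanation to be useful.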

This is especially urgent as platforms like Meta, Google and OpenAI move toward more proactive AI integration into everyday browsing, shopping, researching and messaging. If the next generation of user interfaces is largely AI-driven, we need guardrails that protect user agency and visibility.

What We Can Do About It

Push for clearer controls and better customization. Platforms should offer more than just opt-in vs. opt-out. Users deserve real options to shape their own experience.

Support regulation that promotes transparency and accountability, without crossing into top-down content enforcement. Efforts like the EU’s Digital Services Act and the proposed U.S. Algorithmic Accountability Act are steps in the right direction, but they’ll need refinement and public pressure to strike the right balance.

Build and use ethical AI tools. If you’re developing software or integrating AI into your business, make transparency part of the design process from the start. It’s not just good practice, it’s better UX.

And stay informed. The more we understand how these systems work, the better we can question them, shape them and avoid being passively shaped in return.

A Note on Trade-offs

Of course, transparency isn’t a silver bullet. Too much detail can overwhelm. Too little and it’s meaningless. Make it too open and bad actors will game the system. Make it too closed and users lose trust.

But these are design problems, not deal-breakers.

With the right defaults, layered explanations and real, not performative, controls, we can build systems that are both powerful and responsible. Transparency doesn’t mean giving away the algorithm. It means giving users a say in how it shapes their world.

It won’t be perfect. But it’s better than what we have today.

Who’s Really Choosing What You See?

We’re at an inflection point. The RUPER algorithms that once quietly shaped our feeds are now evolving into the default gatekeepers of knowledge, communication and perception. With AI rewriting and reordering what we consume, understanding the origin and intent behind content is no longer optional.

This isn’t about suppressing free speech or dismantling personalization. It’s about understanding influence. Who’s curating your world? Who’s filtering your options? And what are they prioritizing when they make those decisions?

If we don’t start demanding answers now, we might wake up to find that our sources have disappeared behind an AI-generated veil that looks and feels real but serves someone else’s agenda.

What a Transparent, AI-Driven Future Could Look Like

A future built on algorithmic transparency doesn’t mean every user becomes a data scientist. It means the defaults get better.

You don’t need to study every signal feeding your feed, you just need the option to peek under the hood when something feels off. You don’t need to manage every setting, you need presets that reflect real values: diversity of thought, reduced manipulation, user agency.

Realistically, that future could include:

- A toggle that lets you switch between “most engaging,” “most recent,” and “most diverse” views in your feed, no guesswork.

- An AI assistant that not only summarizes content, but also flags potential gaps or missing perspectives.

- Labels on synthesized answers showing where the data came from and where it didn’t.

- A history of how your preferences have evolved, with the ability to reset or rewind.

- A “why am I seeing this?” button that actually tells you, without legalese.
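The feed toggle from the list above is simple enough to sketch. The example below assumes a hypothetical `Post` record with a topic, an engagement score and an age, and it treats “most diverse” as a round-robin across topics. That definition of diversity is my own simplification, and a real platform would likely use something richer.

```python
from collections import defaultdict
from dataclasses import dataclass
from itertools import chain, zip_longest


@dataclass
class Post:
    title: str
    topic: str
    engagement: float  # hypothetical score in the range 0..1
    age_hours: float


def most_engaging(posts):
    return sorted(posts, key=lambda p: p.engagement, reverse=True)


def most_recent(posts):
    return sorted(posts, key=lambda p: p.age_hours)


def most_diverse(posts):
    """Round-robin across topics so no single topic dominates the top of the feed."""
    by_topic = defaultdict(list)
    for post in most_engaging(posts):
        by_topic[post.topic].append(post)
    interleaved = chain.from_iterable(zip_longest(*by_topic.values()))
    return [post for post in interleaved if post is not None]


# The user-facing toggle: one label per ranking strategy.
VIEWS = {
    "most engaging": most_engaging,
    "most recent": most_recent,
    "most diverse": most_diverse,
}


def build_feed(posts, view="most engaging"):
    return VIEWS[view](posts)


# Made-up sample posts.
posts = [
    Post("Election hot take", "politics", 0.95, 5),
    Post("Election rebuttal", "politics", 0.90, 3),
    Post("Golden retriever hike", "pets", 0.70, 8),
    Post("New telescope images", "science", 0.60, 1),
]

for view in VIEWS:
    print(view, "->", [p.title for p in build_feed(posts, view)])
```

The same four posts come back in three different orders depending on the toggle. The point is less the specific ranking logic and more that the choice is visible and belongs to the user.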

None of that breaks the system. It makes it better. More accountable. More aligned with how people actually want to use technology.

This future doesn’t hand over control to the user completely. But it makes the influence visible. It restores a sense of shared authorship between human and machine.

That’s not just transparency for transparency’s sake. It’s how we preserve choice in an increasingly automated world.

Because the question isn’t whether algorithms will shape our reality.

It’s whether we’ll still have a say in how they do it.