I’ve been using ChatGPT to write code since GPT-3.5, and most recently I’ve been leaning heavily on the GPT-4o model. I’ve also experimented with o1-preview and GPT-4o with canvas. Whether it’s setting up build pipelines, generating code from scratch or refactoring large blocks of code, ChatGPT has changed everything about how I work and write code.

This post is about my personal experiences with coding alongside ChatGPT. From leveraging models like 4o-mini for smaller tasks to experimenting with canvas features, I’ll share some tips from my experience so far.

Why GPT-4o is My Go-To Model for Coding

In my day-to-day coding work, GPT-4o has become my mainstay. I use it for almost everything code-related, whether I’m building new features, refactoring old code or debugging errors. It feels like an upgrade over GPT-3.5 in almost every way, especially for coding.

Key reasons why I stick to GPT-4o:

- Context handling: GPT-4o shines at maintaining context over longer conversations. I like to push the token limits by feeding it large codebases, something 3.5 struggled with because of its smaller context window.

- Precision: GPT-4o’s accuracy in generating code is much better than that of previous generations. Whether it’s understanding complex logic, recognizing patterns in my code or generating new functions, the model stays coherent. I can feed it complex problems, and it rarely gets confused or gives me something completely off.

- Speed: GPT-4o is fast. Maybe not as fast as some of the older models, and it sometimes slows down due to server issues, but when it’s going, it can really get going. To speed things up even more, I’m very specific about how I want ChatGPT to respond, which keeps its responses short and efficient.

- API integration: When working with the API, I use the 4o-mini model to keep costs low; it handles smaller, more modular tasks well. For larger API runs, I sometimes use gpt-3.5-turbo-1106, though I’m cautious about running into its token limits.
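
For the API side, here is a minimal sketch of how a request might be assembled, assuming the official `openai` Python package (v1 or later). The helper names `estimate_tokens` and `build_request` are hypothetical, the four-characters-per-token estimate is a rough heuristic rather than a real tokenizer, and the values in `CONTEXT_LIMITS` are assumptions to verify against OpenAI’s current model documentation.

```python
# Assumed context windows in tokens; check OpenAI's model docs for current values.
CONTEXT_LIMITS = {
    "gpt-4o-mini": 128_000,
    "gpt-3.5-turbo-1106": 16_385,
}

def estimate_tokens(text: str) -> int:
    """Rough estimate: about four characters per token (heuristic, not a tokenizer)."""
    return max(1, len(text) // 4)

def build_request(model: str, system: str, user: str, max_output: int = 1_000) -> dict:
    """Build keyword arguments for client.chat.completions.create, failing early
    if the prompt plus the output budget would not fit the assumed context window."""
    needed = estimate_tokens(system) + estimate_tokens(user) + max_output
    limit = CONTEXT_LIMITS[model]
    if needed > limit:
        raise ValueError(f"~{needed} tokens needed, but {model} allows ~{limit}")
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
        ],
        "max_tokens": max_output,
    }

request = build_request(
    "gpt-4o-mini",
    system="Reply with only the changed lines plus two lines of context.",
    user="Rename the variable x to count.",
)
print(request["model"], estimate_tokens(request["messages"][1]["content"]))
```

With the SDK installed, the dictionary can be passed as `client.chat.completions.create(**request)` on an `OpenAI()` client. The size check simply fails before sending a request that would hit the token limit, which matters most on the smaller-context model.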

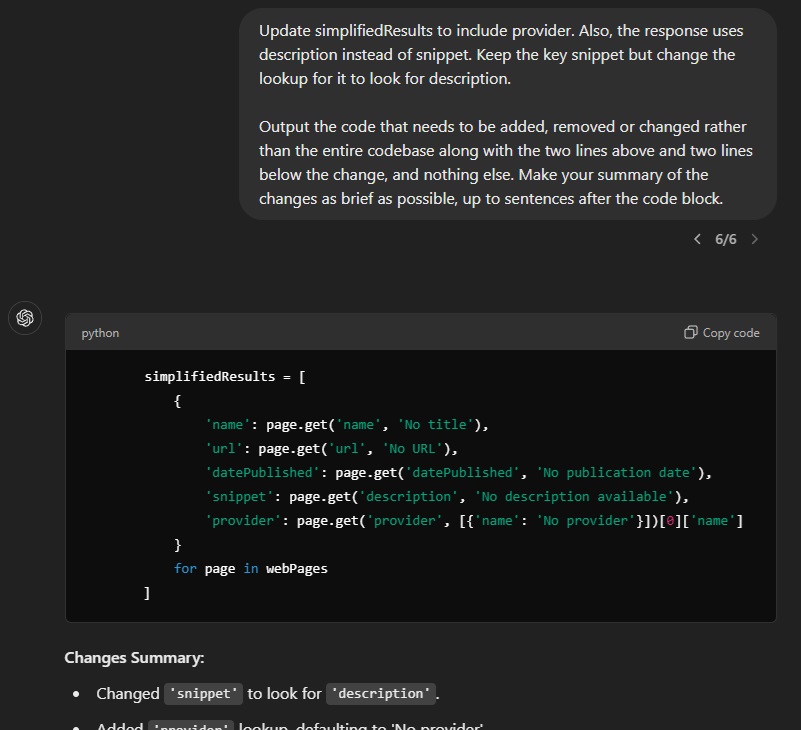

Practical Tip for Developers:

Ask GPT to output only the necessary changes, plus just two lines above and below each changed block. This keeps response times short and your workflow fast. Efficiency improves as you iterate quickly with shorter outputs.

This level of control over the model’s output ensures I can integrate changes quickly without waiting for long, repetitive responses.
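
To make the "two lines above and below" format concrete, Python’s standard `difflib` module produces the same shape: a unified diff with `n=2` lines of context around each change. The file name and contents below are made up for illustration, and `minimal_diff` is a hypothetical helper, not something ChatGPT itself returns.

```python
import difflib

def minimal_diff(old: str, new: str, path: str, context: int = 2) -> str:
    """Return a unified diff showing only changed lines plus `context` lines around them."""
    return "".join(
        difflib.unified_diff(
            old.splitlines(keepends=True),
            new.splitlines(keepends=True),
            fromfile=path,
            tofile=path,
            n=context,
        )
    )

before = (
    "def total(items):\n"
    "    result = 0\n"
    "    for item in items:\n"
    "        result += item.price\n"
    "    return result\n"
)
# Change a single line, as a typical small edit request would.
after = before.replace("item.price\n", "item.price * item.qty\n")

print(minimal_diff(before, after, "cart.py"))
```

This prints a single hunk, `@@ -2,4 +2,4 @@`, covering lines 2 through 5. The function signature on line 1 is left out because it sits three lines above the change, which is exactly the kind of noise the tip is meant to cut.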

GPT-4o with Canvas Mode

I’ve also been experimenting with the Canvas mode in GPT-4o. At first glance, it seemed like an incredibly useful tool that could provide a visual, real-time editing experience. However, my time with it has been a bit bumpy. I’m going to wait until the product matures a bit more, but here’s what I’ve encountered so far:

My Issues with Canvas Mode:

- Unnecessary changes: One of the biggest frustrations I’ve had with the canvas feature is that it often changes code that doesn’t need to be touched. It seems to oversimplify or modify existing code, likely to create space for new code rather than just appending what I asked for.

- Losing track of updates: When I make manual updates to the code within the canvas, the model tends to revert to older versions of the code. It seems unaware of my changes, so I often have to remind it of the updates I’ve made manually.

- Reverting to chat output: Sometimes, rather than updating the code in the canvas, it goes back to regular chat mode and starts outputting code there. This breaks the flow of what canvas mode is supposed to accomplish.

- Writing instead of answering: If I ask a question about the code while in canvas mode, it’s more likely to try to write more code than to provide a simple explanation.

Practical Tip for Developers:

When using Canvas mode, be specific about where code should go and which parts should remain untouched. If you’re making manual changes, reinforce those updates with clear reminders to the model. Guiding the model helps maintain clarity and accuracy in collaborative editing.

While I’m still hopeful canvas mode can evolve into a more seamless coding tool, at the moment, it feels more experimental than reliable for larger projects.

Exploring o1-Preview

When I really want to push the limits of what GPT can do, I turn to o1-preview, and it has been a great experience. Because my usage is limited, I reserve it for particularly complex or creative tasks. This model definitely feels like the future of AI coding assistants, but there are trade-offs.

Strengths of o1-Preview:

- Creative problem-solving: If I’m looking for an out-of-the-box solution or have a unique challenge that requires a lot of thought, o1-preview excels. It dives deeper into problems than 4o, often returning more thoughtful and creative approaches.

- Precision with complexity: For more complex builds, such as when I’m dealing with intricate API interactions or trying to optimize specific algorithms, o1 has been incredibly effective.

Weaknesses of o1-Preview:

- Speed: o1-preview can be slower than I’d like. It sometimes seems to get stuck in a loop, rethinking the problem multiple times, which leads to long waits for outputs that don’t always match the complexity of the task. If I know what I need, I stick with 4o and only turn to o1-preview for the more creative tasks.

- Understanding: One of the touted benefits is the model’s ability to take simple requests and truly understand them, so you shouldn’t have to provide much additional context. I personally haven’t found this to be the case. I still need to provide very clear and detailed instructions to fully leverage the model’s reasoning capabilities.

Practical Tip for Developers:

Use o1-preview for complex, creative tasks where the solution isn’t straightforward. However, if you know exactly what you need, 4o will usually get the job done faster and just as effectively. Until the model evolves, save time by reserving o1-preview for the most challenging problems.

When GPT Makes Assumptions

One area where ChatGPT shines for me is refactoring code, but it does have a tendency to make assumptions. If I’m working with legacy code or using third-party libraries, ChatGPT can sometimes “overcorrect” and break things by updating code that’s already working fine.

Here’s how I avoid that:

- Be direct: I always tell ChatGPT that certain parts of my code work perfectly as-is. A simple instruction like “This code works perfectly, do not make assumptions about it. Just refactor as-is” often does the trick.

Practical Tip for Developers:

When refactoring, clearly instruct ChatGPT not to change working code. This keeps the model on the parts of the code that need attention, so it’s less likely to introduce issues by accident. Refactoring works best when the model is focused on the right sections of code.
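
One way to back up that instruction is to check afterwards whether the code you marked as working was touched anyway. Below is a minimal sketch for Python sources using the standard `ast` module; it is an illustration of the idea rather than part of the workflow described above, and `changed_definitions` is a hypothetical helper. It compares the parsed definitions of the names you protected, so reformatting and comment changes are ignored but any change to logic or signatures is flagged.

```python
import ast

def changed_definitions(before: str, after: str, protected: set[str]) -> set[str]:
    """Return the protected function/class names whose definitions differ
    (or disappeared) between two versions of a Python module.

    ast.dump omits line numbers and comments, so moving or reformatting a
    definition is not flagged. Simplification: nested and top-level
    definitions that share a name overwrite each other.
    """
    def definitions(source: str) -> dict[str, str]:
        return {
            node.name: ast.dump(node)
            for node in ast.walk(ast.parse(source))
            if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef))
        }

    old, new = definitions(before), definitions(after)
    return {name for name in protected if old.get(name) != new.get(name)}

original = (
    "def load(path):\n"
    "    return open(path).read()\n"
    "\n"
    "def parse(s):\n"
    "    return s.split(',')\n"
)
refactored = (
    "def load(path):\n"
    "    # same logic, new comment\n"
    "    return open(path).read()\n"
    "\n"
    "def parse(text):\n"
    "    return [part.strip() for part in text.split(',')]\n"
)

print(changed_definitions(original, refactored, {"load", "parse"}))
```

Here only `parse` is reported, which is expected since it is the function being refactored. If `load` showed up too, that would be the signal to push back and restate that it works as-is.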

Handling Debugging with ChatGPT

Debugging can be hit or miss. While GPT-4o generally gives accurate solutions, I’ve found that ChatGPT sometimes offers a fix without fully understanding the provided context, particularly with error messages. For example, if I share some JavaScript and a related console error, it occasionally generalizes the issue and recommends irrelevant fixes.

To resolve this, I’ve learned to break down the problem step by step for ChatGPT:

- Share the exact error message.

- Explain what the error actually means in the context of the provided code.

- Ask ChatGPT to take that into consideration before offering a solution.
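
The three steps above can be folded into a small prompt template so none of them gets skipped. This is a minimal sketch: `build_debug_prompt` is a hypothetical helper, and the JavaScript line, error message and explanation in the example are invented for illustration.

```python
def build_debug_prompt(code: str, error: str, meaning: str) -> str:
    """Assemble a debugging prompt in the order: code, exact error,
    what the error means here, then the request for a fix."""
    sections = [
        "Here is the relevant code:\n" + code.strip(),
        # Step 1: the error message exactly as the console printed it.
        "Exact error message:\n" + error.strip(),
        # Step 2: my own reading of the error in the context of this code.
        "What this error means in this code:\n" + meaning.strip(),
        # Step 3: ask the model to use that reading before proposing a fix.
        "Take the explanation above into account before suggesting a fix.",
    ]
    return "\n\n".join(sections)

prompt = build_debug_prompt(
    code="const names = data.items.map(item => item.name);",
    error="TypeError: Cannot read properties of undefined (reading 'map')",
    meaning="data.items is undefined because the API response nests the list under data.results.",
)
print(prompt)
```

Keeping the explanation as its own labeled section makes it hard for the model to skip over, which is the point of step two.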

Practical Tip for Developers:

If you’re debugging and getting incorrect solutions from ChatGPT, break down the error and guide the model to the root of the problem. This helps you get more accurate fixes. Clarifying the problem saves you time and avoids chasing the wrong solution.

Recent Use Cases

I’ve been using ChatGPT to write code for so long that I may write more posts in the future with more tips and techniques. The way it fits into my workflow has evolved over time, and I’m constantly discovering new ways to use it more efficiently. For now, I’ll share some recent use cases to give you a snapshot of how it’s been helping me lately.

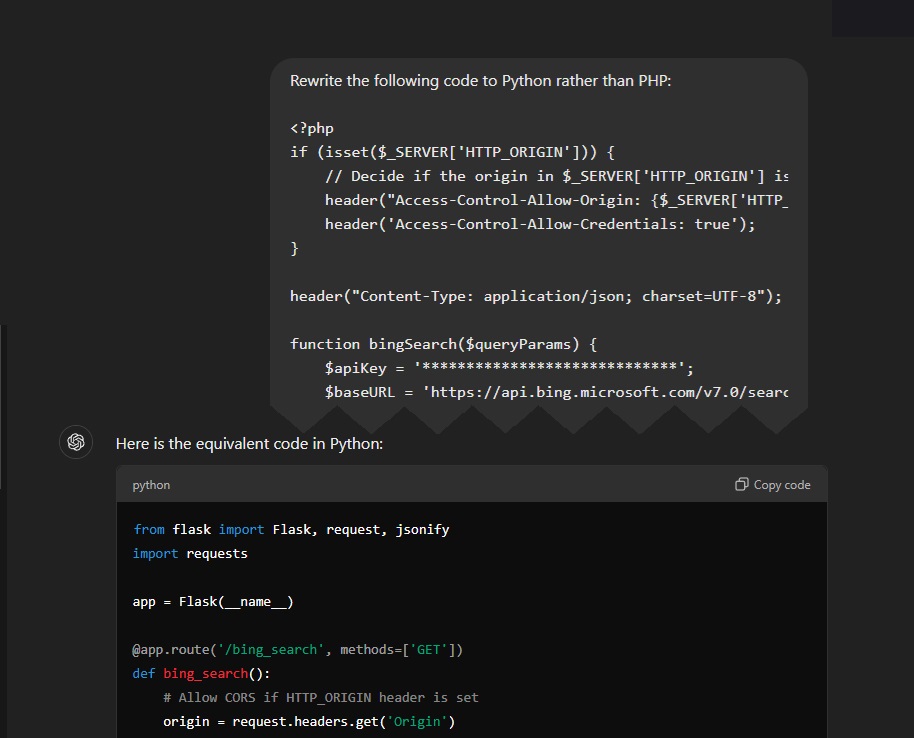

Changing Languages

One of my favorite use cases with ChatGPT is when I need to convert code from one language to another. For me, old habits die hard and I still like to prototype quick web apps in PHP when I want to bang out an idea. But once I’m ready to take the project further, I’ll paste the code into ChatGPT and have it translate the entire app into my preferred language for the final build.

ChatGPT handles this translation really well, adjusting syntax, mapping functions to their equivalents and handling language-specific quirks. This way, I don’t waste time rebuilding an idea from scratch.

Duplicating Codebases

Another standout use case is duplicating codebases. Recently, I wrote code to pull data from a specific source, run it through an LLM and then format it for database storage. Once that core logic was functioning well, I pasted the entire code block into ChatGPT and asked it to rewrite everything for a different data source.

It updated API calls, handled the different data responses and adjusted the OpenAI calls accordingly. I was able to replicate this for six additional sources in around 30 minutes, a task that would have easily taken hours otherwise.
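
Here is a minimal sketch of the pipeline shape described above, with hypothetical names throughout and the LLM call replaced by a stub so it runs offline. This is not how my original code was organized; it just isolates the three things that changed for each new source (the API call, the response handling and the prompt sent to OpenAI) as fields on a `Source` record, while the `process` loop stays the same.

```python
import json
from dataclasses import dataclass
from typing import Callable

@dataclass
class Source:
    """The parts that differ from one data source to the next (hypothetical)."""
    name: str
    fetch: Callable[[], list]            # source-specific API call
    build_prompt: Callable[[dict], str]  # source-specific response shape -> LLM input
    record_id: Callable[[dict], str]     # source-specific identifier field

def process(source: Source, llm: Callable[[str], str]) -> list:
    """Pull records, run each one through the LLM, and format rows for storage."""
    rows = []
    for record in source.fetch():
        rows.append({
            "source": source.name,
            "external_id": source.record_id(record),
            "summary": llm(source.build_prompt(record)),
            "raw": json.dumps(record, sort_keys=True),
        })
    return rows

def fake_llm(prompt: str) -> str:
    # Deterministic stand-in for a real model call so the sketch runs offline.
    return prompt.upper()

news = Source(
    name="news",
    fetch=lambda: [{"id": 7, "headline": "Local team wins"}],
    build_prompt=lambda r: "Summarize this headline: " + r["headline"],
    record_id=lambda r: str(r["id"]),
)

for row in process(news, fake_llm):
    print(row)
```

In this layout, adding another source means supplying a new `Source` value, which is roughly the same set of changes I was asking ChatGPT to make when it rewrote the whole file.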

Rapid Prototyping

For rapid prototyping, ChatGPT has saved me countless hours. In one project, I was modifying an array of data points and wanted a way to visually manipulate them. I pasted the entire codebase into ChatGPT and instructed it to create a standalone tool to allow me to adjust the data points using a canvas element.

In just a few iterations, I had a working prototype that let me manipulate the array visually, outputting the JSON array of points that I could then paste back into the original project. This saved me from manually tweaking data points and constantly refreshing the experience to confirm placements.

Code Smarter, Not Harder

Working with ChatGPT to write code has been an evolving process, and with each new model I’ve found new ways to streamline my workflow. While GPT-4o handles most of my daily coding tasks, I’m excited to see how o1-preview develops and whether future versions of Canvas mode will address its current shortcomings.

If you haven’t tried integrating ChatGPT into your coding workflow yet, I’d recommend starting small: ask it to help you write commit messages or refactor a small piece of code. As you get more comfortable, you’ll find that it can help with even the most complex tasks, freeing you up to focus on the creative aspects of development.

The next step for me is exploring the current state of VS Code extensions. I’ve been holding back because my current workflow with ChatGPT has gotten me pretty far, but I know it’s inevitable.