In my last post, I shared a few tools I’ve been building as part of a new section on my site, Jon Bishop’s AI Projects. These are small, self-contained apps powered by GPT and built using a one-page architecture. No frameworks, no dependencies, just quick experiments with purpose.

Most of the first batch leaned toward creative or marketing-focused ideas. But like a lot of people, I’ve been watching the world get more polarized, more chaotic and, honestly, just more confusing. Social media algorithms are optimized for engagement, not clarity. Nuance gets flattened into tribal headlines. AI bots amplify bad information faster than we can react. It’s a mess.

I like to stay informed, and while there are some excellent tools and platforms already tackling this space, I wanted to explore it for myself. Not to reinvent the wheel, but to better understand how AI might support critical thinking, belief mapping and truth detection, at least in small, local ways.

Here are three new tools I’ve added to the collection, built around that idea.

Belief System Chat

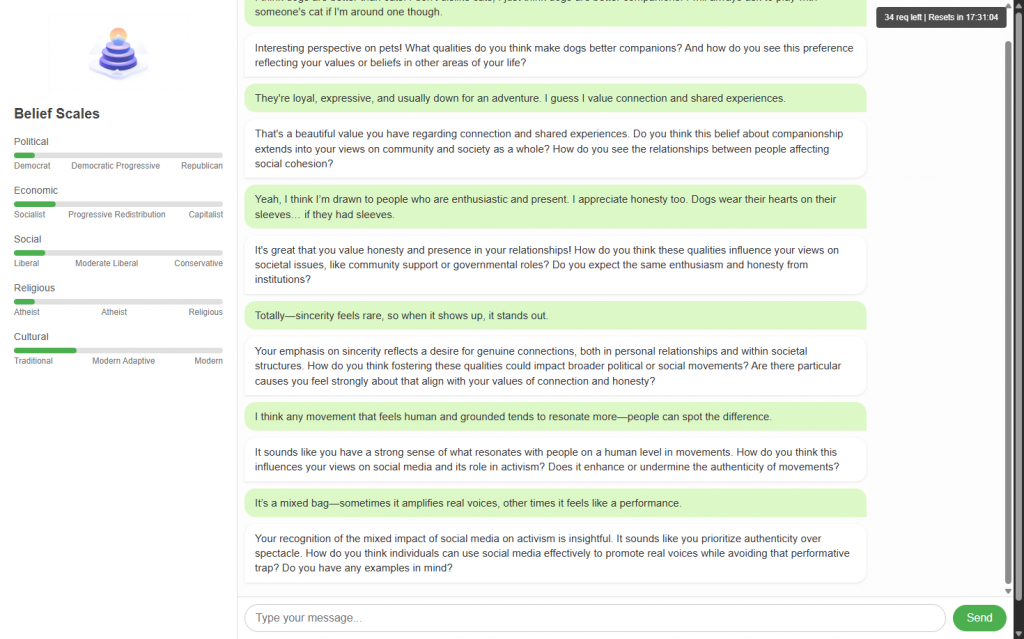

This was one of the first AI tools I built using my one-page app framework and OpenAI proxy setup. It’s called Belief System Chat and it started with a personal question: could an AI chatbot help me explore what I actually believe?

Not what I say I believe in conversation. Not what a label assigns me. But the foundational stuff. The things shaped by both experience and bias, education and emotion.

I’m someone who prefers person-to-person dialogue. I rarely jump into online debates, mostly because the format just doesn’t lend itself to meaningful discourse. I do have a belief system, though, anchored in things I try to verify but also shaped by a lot of unconscious nurture. And like many people, I struggle with the labels. Am I center-left socially but libertarian economically? How much of my cultural identity is tied to belief and how much is just habit?

So I built a conversational interface where you chat with the AI like you would with a thoughtful friend. As the conversation progresses, your belief positions are mapped across five dimensions:

- Political

- Economic

- Social

- Religious

- Cultural

Each has a value from 1 to 100 and labels for the extremes. You might start off moderate on one issue and slowly shift as you dig deeper.

The backend continuously updates two things:

- A belief overview, a running paragraph that summarizes what you’ve expressed.

- A set of belief scales, each updated with your current position and the reasoning behind it.
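Here is a minimal TypeScript sketch of how state like this could be modeled. It is not the app's actual code: the type names, field names and the `applyUpdate` helper are all assumptions made for illustration. It shows the five dimensions, a 1 to 100 value with labels for each extreme, and an update that replaces the overview and one scale at a time.

```typescript
// Hypothetical state shape; names are illustrative, not the app's real schema.
type Dimension = "political" | "economic" | "social" | "religious" | "cultural";

interface BeliefScale {
  value: number;      // current position, 1 to 100
  lowLabel: string;   // label for the 1 end of the scale
  highLabel: string;  // label for the 100 end of the scale
  reasoning: string;  // why the position sits where it does
}

interface BeliefState {
  overview: string;   // running summary paragraph
  scales: Record<Dimension, BeliefScale>;
}

// Keep model-provided numbers inside the 1..100 range; fall back to the midpoint.
function clampScale(value: number): number {
  if (!Number.isFinite(value)) return 50;
  return Math.min(100, Math.max(1, Math.round(value)));
}

// Return a new state with one scale and the overview updated (no mutation).
function applyUpdate(
  state: BeliefState,
  dimension: Dimension,
  value: number,
  reasoning: string,
  overview: string
): BeliefState {
  const scales = { ...state.scales };
  scales[dimension] = { ...state.scales[dimension], value: clampScale(value), reasoning };
  return { overview, scales };
}
```

Clamping matters here because the numbers would come from a model response, which can't be trusted to stay in range.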

Helpful Tip

Use the same browser to continue your conversation over multiple sessions. Everything is stored locally so you can pick up where you left off. No login, no data saved server-side.
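Local persistence like this usually comes down to the browser's `localStorage`. The sketch below is one way it could work, under stated assumptions: the storage key and helper names are hypothetical, and the small `KeyValueStore` interface exists only so the same code runs against an in-memory stand-in outside a browser.

```typescript
// Minimal subset of the Web Storage API; window.localStorage satisfies it structurally.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

const STORAGE_KEY = "belief-system-chat"; // hypothetical key name

// Serialize the whole session as JSON under a single key.
function saveSession<T>(store: KeyValueStore, session: T): void {
  store.setItem(STORAGE_KEY, JSON.stringify(session));
}

// Restore the session, or return the fallback if nothing usable is stored.
function loadSession<T>(store: KeyValueStore, fallback: T): T {
  const raw = store.getItem(STORAGE_KEY);
  if (raw === null) return fallback;
  try {
    return JSON.parse(raw) as T;
  } catch {
    return fallback; // corrupted data: start fresh instead of crashing
  }
}

// In-memory stand-in, handy for tests or non-browser environments.
function createMemoryStore(): KeyValueStore {
  const data = new Map<string, string>();
  return {
    getItem: (key) => data.get(key) ?? null,
    setItem: (key, value) => {
      data.set(key, value);
    },
  };
}
```

In a browser you would pass `window.localStorage` as the store, which is also why the conversation only follows you within the same browser.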

This isn’t trying to tell you what to believe. It’s more of a mirror, one that adapts as you think out loud.

Belief Splitter

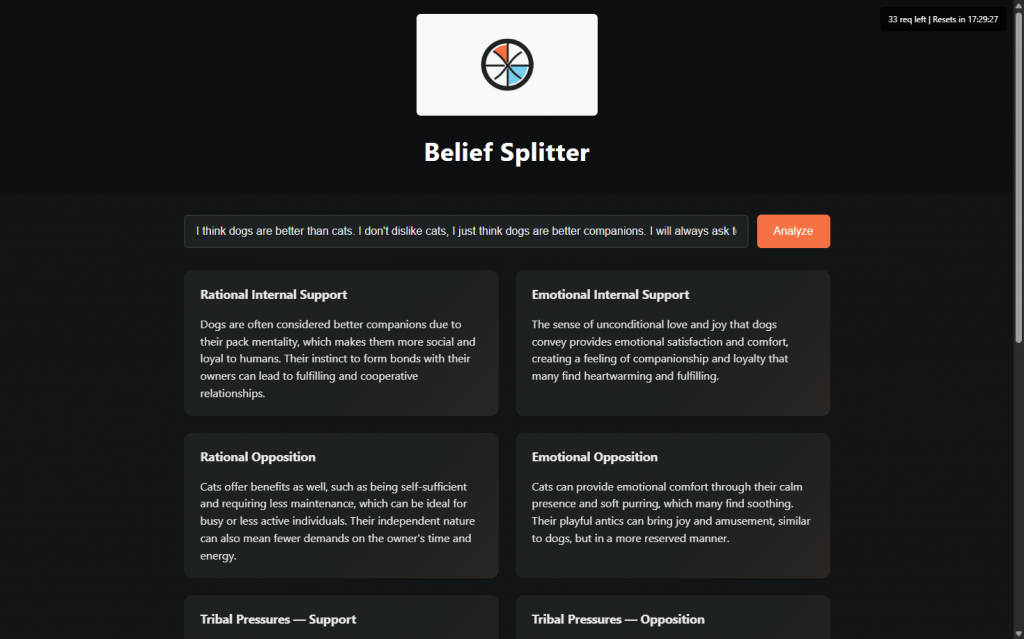

This one evolved out of a frustration I kept having while reading news and debates online. I’d see a statement like “We need stronger borders” or “Healthcare is a human right” and immediately feel the tug of associations: news channels, political parties, personal experiences and so on. That tangle of reactions makes it hard to tell: do I agree with the idea itself or with the context it comes wrapped in?

Belief Splitter is a little cognitive dissonance explorer. You feed it any belief, whether political, cultural, moral or otherwise, and it pulls the idea apart into six areas:

- Rational internal support

- Emotional internal support

- Rational opposition

- Emotional opposition

- Tribal pressures for

- Tribal pressures against

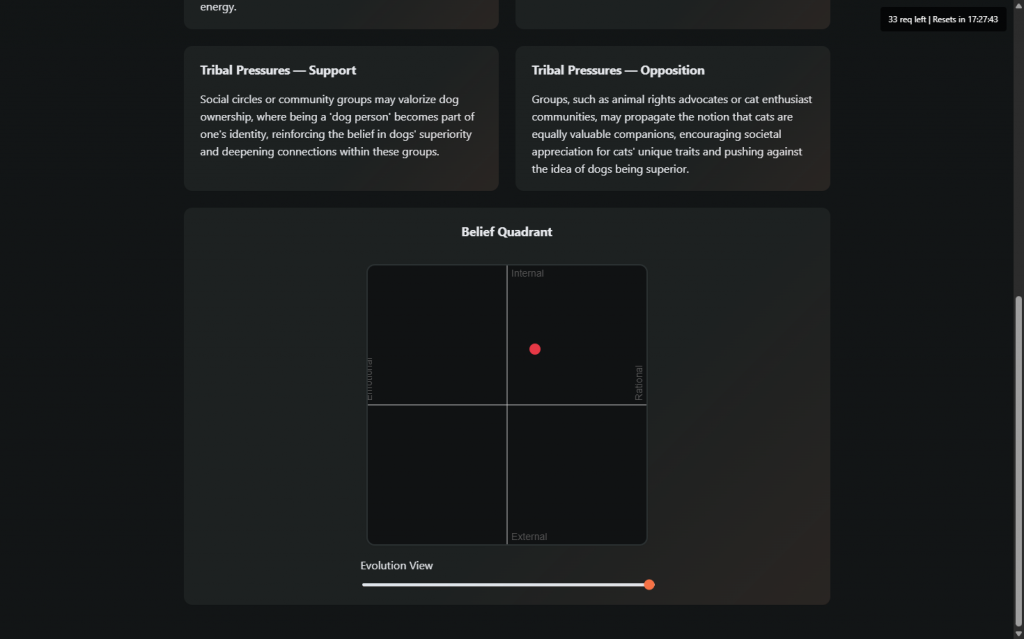

Then it places the belief on a two-axis chart:

- Rationality (emotional ↔ rational)

- Internality (external pressure ↔ personal conviction)
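To make the two axes concrete, here is a rough sketch of one way six area weights could be turned into a chart position. This is an illustration, not how Belief Splitter actually computes it: the numeric weights, the `SplitScores` shape and the formula are all assumptions. It treats the two "internal support" areas as personal conviction and the two tribal areas as external pressure.

```typescript
// Hypothetical non-negative weights for each of the six areas.
interface SplitScores {
  rationalSupport: number;
  emotionalSupport: number;
  rationalOpposition: number;
  emotionalOpposition: number;
  tribalFor: number;
  tribalAgainst: number;
}

// Both axes run from -1 to 1.
interface ChartPoint {
  rationality: number; // -1 = emotional, 1 = rational
  internality: number; // -1 = external pressure, 1 = personal conviction
}

// Normalized difference in [-1, 1]; 0 when there is no weight on either side.
function balance(positive: number, negative: number): number {
  const total = positive + negative;
  return total === 0 ? 0 : (positive - negative) / total;
}

function placeOnChart(s: SplitScores): ChartPoint {
  const rational = s.rationalSupport + s.rationalOpposition;
  const emotional = s.emotionalSupport + s.emotionalOpposition;
  const internal = s.rationalSupport + s.emotionalSupport; // assumption: conviction
  const external = s.tribalFor + s.tribalAgainst;          // assumption: pressure
  return {
    rationality: balance(rational, emotional),
    internality: balance(internal, external),
  };
}
```

A belief held mostly for reasoned, personal motives would land in the upper right; one driven by emotion and group pressure would land in the lower left.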

The goal isn’t to tell you whether a belief is right or wrong. It’s to visualize where the weight of your reasoning is coming from.

Like everything in this AI Projects section, it’s a one-page app. No accounts, no servers, just client-side analysis via a lightweight OpenAI call.

AI Story Validator

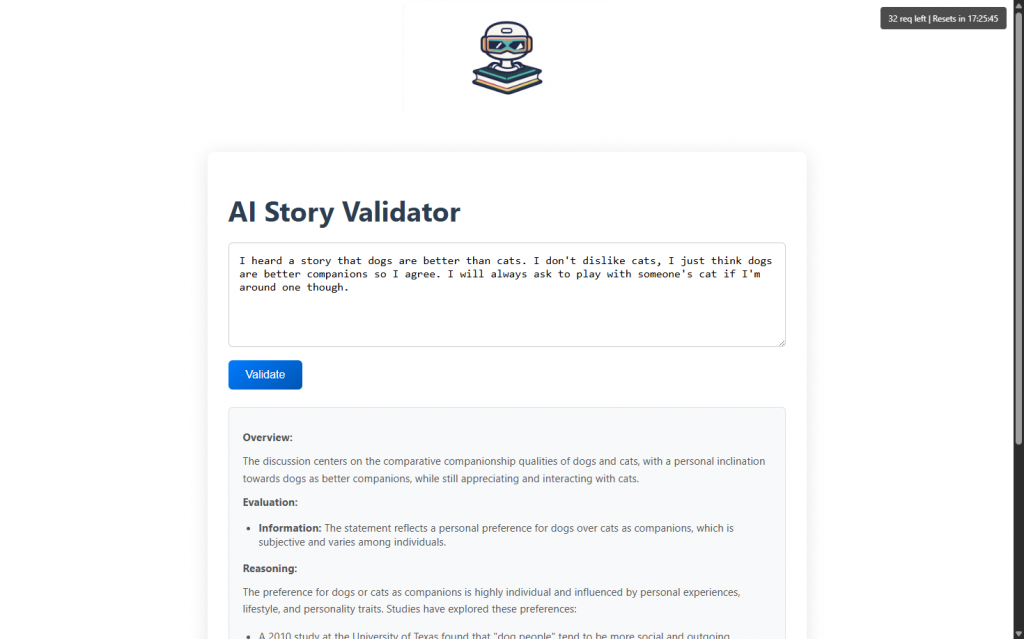

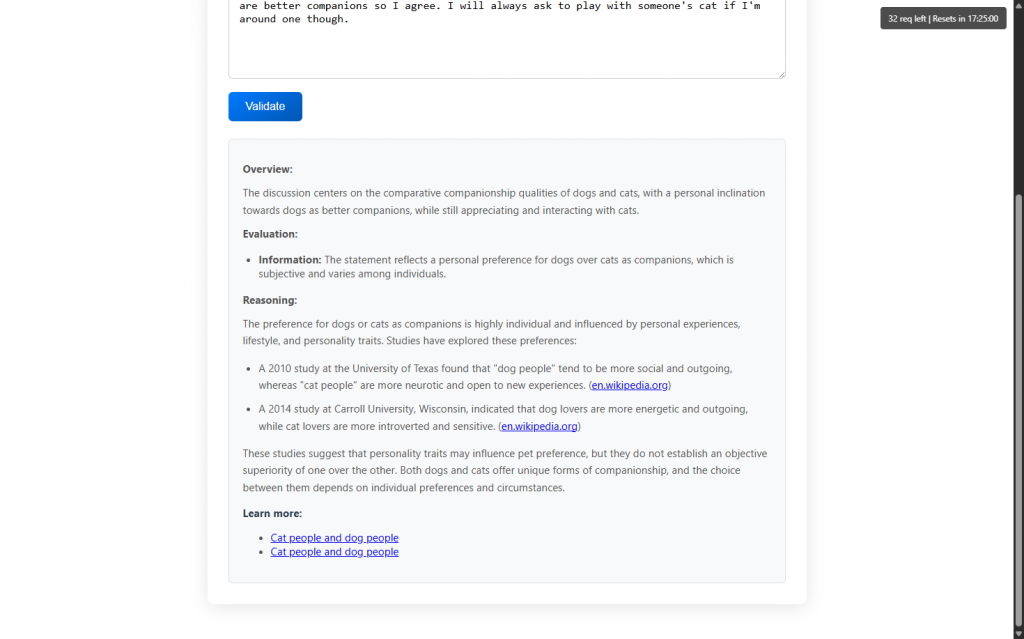

This last one was mostly an excuse to play with OpenAI’s new gpt-4o-search-preview model. It can perform real-time web searches and return citations, which makes it a perfect sandbox for testing truth detection.

The tool is pretty simple:

- Paste in any chunk of text (URLs optional but encouraged)

- It scrapes the content of any links included

- It feeds everything into GPT-4o with a prompt designed to separate signal from noise

- The model returns:

  - A truthfulness score: Information, Misinformation or A Little Bit Of Everything

  - An overview of the story

  - A reasoning section that explains why the model came to that conclusion

  - Occasionally, citations pulled from real search results
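The steps before and after the model call can be sketched in a few lines of TypeScript. This is a hedged illustration rather than the tool's real code: it assumes the model is asked to reply with JSON, and the field names (`verdict`, `overview`, `reasoning`, `citations`) and both helper functions are hypothetical. Scraping and the model call itself are left out.

```typescript
type Verdict = "Information" | "Misinformation" | "A Little Bit Of Everything";
const VERDICTS: readonly Verdict[] = [
  "Information",
  "Misinformation",
  "A Little Bit Of Everything",
];

// Pull unique http(s) links out of pasted text, dropping trailing punctuation.
function extractUrls(text: string): string[] {
  const matches = text.match(/https?:\/\/[^\s<>"')\]]+/g) ?? [];
  const cleaned = matches.map((url) => url.replace(/[.,;:!?]+$/, ""));
  return Array.from(new Set(cleaned));
}

interface StoryResult {
  verdict: Verdict;
  overview: string;
  reasoning: string;
  citations: string[]; // may be empty; citations only show up occasionally
}

// Validate a (hypothetical) JSON reply; return null if it doesn't fit the shape.
function parseResult(raw: string): StoryResult | null {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return null;
  }
  if (typeof data !== "object" || data === null) return null;
  const r = data as Record<string, unknown>;
  const verdict = VERDICTS.find((v) => v === r.verdict);
  if (!verdict || typeof r.overview !== "string" || typeof r.reasoning !== "string") {
    return null;
  }
  const citations = Array.isArray(r.citations)
    ? r.citations.filter((c): c is string => typeof c === "string")
    : [];
  return { verdict, overview: r.overview, reasoning: r.reasoning, citations };
}
```

Rejecting anything outside the three known verdicts keeps a malformed model reply from being displayed as if it were a real result.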

It’s not bulletproof. And it’s definitely not meant to replace real fact-checking tools. This one’s more of a utility, something you can toss a sketchy post into and get a gut check.

Final Thoughts

None of these tools are trying to be the best at what they do. They’re just small experiments, ways for me to explore what generative AI can do when the goal isn’t output, but understanding.

I like building these things because they help me think. They help me see my blind spots. They make abstract ideas feel a little more tangible. And they keep me coding in a way that feels connected to the world, not just the tech.

I’ll keep adding to the AI Projects section as new ideas come up, especially the ones that feel like they belong more in a workshop than a marketplace. If something here resonates or inspires you to build your own version, I’d love to see it.

Until next time, stay curious.